Getting Started

OpenAI-HK provides a relay (proxy) interface for OpenAI services such as GPT, DALL-E 3, gpt-4o, o1, o3, and gpt-image-1. It is a paid service: you must pay before you can call the API.

OpenAI-HK also provides Suno, Flux, Midjourney, Google Veo3, Riffusion, and others, covering AI music, AI video, AI image, and AI chat services.

API Method

First, register an account at openai-hk.

Log in by authorizing with your GitHub account.

After logging in, click "Get key" to obtain your API key.

Copy the returned api_key, which starts with hk-, and use it in place of the api_key in your original request header.

Example:

Original: sk-sdiL2SMN4D7GBqFhBsYdT2345kFJhwEHGXbU5RzCM8dS3lrn

Now: hk-wsvj0oyeb0srl8b76xgzolc678996mhwlk3p3y7p3qo9wjdl

OpenAI api_keys normally start with sk-, but api_keys on our platform start with hk-.

Change the base URL from api.openai.com to api.openai-hk.com.

Example:

Original: https://api.openai.com/v1/chat/completions

Now: https://api.openai-hk.com/v1/chat/completions
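The two substitutions above (key and host) can be sketched in Python. This is a minimal illustration rather than an official client: the helper names `to_hk_url` and `check_hk_key` are hypothetical, and the only facts taken from this page are the `hk-` key prefix and the `api.openai-hk.com` host.

```python
from urllib.parse import urlsplit, urlunsplit

OFFICIAL_HOST = "api.openai.com"
HK_HOST = "api.openai-hk.com"


def to_hk_url(url: str) -> str:
    """Return `url` with the official OpenAI host swapped for the OpenAI-HK host."""
    parts = urlsplit(url)
    if parts.netloc != OFFICIAL_HOST:
        return url  # not an official OpenAI URL: leave it unchanged
    return urlunsplit(parts._replace(netloc=HK_HOST))


def check_hk_key(api_key: str) -> str:
    """Raise early if the key does not look like an OpenAI-HK key (hk- prefix)."""
    if not api_key.startswith("hk-"):
        raise ValueError("expected an OpenAI-HK key starting with 'hk-'")
    return api_key
```

Only the host changes; the path (`/v1/chat/completions`) and the request body stay the same.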

Examples of Chat Interface

- The examples below use the chat (v1/chat/completions) interface; the official API also offers many other interface types.

- For more chat parameters, see the official documentation: https://platform.openai.com/docs/api-reference/chat/create

- A few examples follow.

cURL Example

curl --request POST \
  --url https://api.openai-hk.com/v1/chat/completions \
  --header 'Authorization: Bearer hk-your-key' \
  -H "Content-Type: application/json" \
  --data '{
    "max_tokens": 1200,
    "model": "gpt-3.5-turbo",
    "temperature": 0.8,
    "top_p": 1,
    "presence_penalty": 1,
    "messages": [
      {
        "role": "system",
        "content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible."
      },
      {
        "role": "user",
        "content": "Which version are you?"
      }
    ]
  }'

To call GPT-4, change the model in the example above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

PHP Example

<?php
// Initialize a cURL session for the chat completions endpoint.
$ch = curl_init();
curl_setopt($ch, CURLOPT_URL, 'https://api.openai-hk.com/v1/chat/completions');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
curl_setopt($ch, CURLOPT_POST, 1);

// Replace hk-your-key with your own key.
$headers = array();
$headers[] = 'Content-Type: application/json';
$headers[] = 'Authorization: Bearer hk-your-key';
curl_setopt($ch, CURLOPT_HTTPHEADER, $headers);

$data = array(
    'max_tokens' => 1200,
    'model' => 'gpt-3.5-turbo',
    'temperature' => 0.8,
    'top_p' => 1,
    'presence_penalty' => 1,
    'messages' => array(
        array(
            'role' => 'system',
            'content' => 'You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.'
        ),
        array(
            'role' => 'user',
            'content' => 'Which version are you?'
        )
    )
);
$data_string = json_encode($data);
curl_setopt($ch, CURLOPT_POSTFIELDS, $data_string);

// Send the request and report transport errors, if any.
$result = curl_exec($ch);
if (curl_errno($ch)) {
    echo 'Error:' . curl_error($ch);
}
curl_close($ch);

echo $result;

To call GPT-4, change the model in the example above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

Python Example

import requests
import json

url = "https://api.openai-hk.com/v1/chat/completions"

# Replace hk-your-key with your own key.
headers = {
    "Content-Type": "application/json",
    "Authorization": "Bearer hk-your-key"
}

data = {
    "max_tokens": 1200,
    "model": "gpt-3.5-turbo",
    "temperature": 0.8,
    "top_p": 1,
    "presence_penalty": 1,
    "messages": [
        {
            "role": "system",
            "content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible."
        },
        {
            "role": "user",
            "content": "Which version are you?"
        }
    ]
}

# Send the JSON body as UTF-8 bytes and print the raw response text.
response = requests.post(url, headers=headers, data=json.dumps(data).encode('utf-8'))
result = response.content.decode("utf-8")
print(result)

To call GPT-4, change the model in the example above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.
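Switching models only changes the `model` field of the request body. The sketch below makes that explicit using just the standard library; the function names `build_chat_request` and `extract_reply` are hypothetical, and the response layout (`choices[0].message.content`) is assumed to match the official OpenAI format, which this page does not show.

```python
import json
import urllib.request

API_URL = "https://api.openai-hk.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for the given model."""
    payload = {
        "model": model,  # e.g. "gpt-4" or "gpt-4-0613" to call GPT-4
        "max_tokens": 1200,
        "temperature": 0.8,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of a response (assumes the official OpenAI format)."""
    return response_body["choices"][0]["message"]["content"]


# Usage (performs a real, billed API call, so it is left commented out):
# req = build_chat_request("hk-your-key", "Which version are you?", model="gpt-4")
# with urllib.request.urlopen(req, timeout=60) as resp:
#     print(extract_reply(json.load(resp)))
```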

JavaScript Example

const axios = require("axios");

const url = "https://api.openai-hk.com/v1/chat/completions";

// Replace hk-your-key with your own key.
const headers = {
  "Content-Type": "application/json",
  Authorization: "Bearer hk-your-key",
};

const data = {
  max_tokens: 1200,
  model: "gpt-3.5-turbo",
  temperature: 0.8,
  top_p: 1,
  presence_penalty: 1,
  messages: [
    {
      role: "system",
      content:
        "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
    },
    {
      role: "user",
      content: "Which version are you?",
    },
  ],
};

axios
  .post(url, data, { headers })
  .then((response) => {
    const result = response.data;
    console.log(result);
  })
  .catch((error) => {
    console.error(error);
  });

TypeScript Example

import axios from "axios";

const url = "https://api.openai-hk.com/v1/chat/completions";

const headers = {
  "Content-Type": "application/json",
  Authorization: "Bearer hk-your-key",
};

const data = {
  max_tokens: 1200,
  model: "gpt-3.5-turbo",
  temperature: 0.8,
  top_p: 1,
  presence_penalty: 1,
  messages: [
    {
      role: "system",
      content:
        "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
    },
    {
      role: "user",
      content: "Which version are you?",
    },
  ],
};

axios
  .post(url, data, { headers })
  .then((response) => {
    const result = response.data;
    console.log(result);
  })
  .catch((error) => {
    console.error(error);
  });

Java Example

import okhttp3.*;

import java.io.IOException;

public class OpenAIChat {
    public static void main(String[] args) throws IOException {
        String url = "https://api.openai-hk.com/v1/chat/completions";

        OkHttpClient client = new OkHttpClient();
        MediaType mediaType = MediaType.parse("application/json");

        String json = "{\n" +
                "  \"max_tokens\": 1200,\n" +
                "  \"model\": \"gpt-3.5-turbo\",\n" +
                "  \"temperature\": 0.8,\n" +
                "  \"top_p\": 1,\n" +
                "  \"presence_penalty\": 1,\n" +
                "  \"messages\": [\n" +
                "    {\n" +
                "      \"role\": \"system\",\n" +
                "      \"content\": \"You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.\"\n" +
                "    },\n" +
                "    {\n" +
                "      \"role\": \"user\",\n" +
                "      \"content\": \"Which version are you?\"\n" +
                "    }\n" +
                "  ]\n" +
                "}";

        // OkHttp 3.x argument order; OkHttp 4+ prefers RequestBody.create(json, mediaType).
        RequestBody body = RequestBody.create(mediaType, json);

        Request request = new Request.Builder()
                .url(url)
                .post(body)
                .addHeader("Content-Type", "application/json")
                .addHeader("Authorization", "Bearer hk-your-key")
                .build();

        Call call = client.newCall(request);
        Response response = call.execute();
        String result = response.body().string();
        System.out.println(result);
    }
}

Go Example

package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

func main() {
	url := "https://api.openai-hk.com/v1/chat/completions"
	apiKey := "hk-your-key"

	payload := map[string]interface{}{
		"max_tokens":       1200,
		"model":            "gpt-3.5-turbo",
		"temperature":      0.8,
		"top_p":            1,
		"presence_penalty": 1,
		"messages": []map[string]string{
			{
				"role":    "system",
				"content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
			},
			{
				"role":    "user",
				"content": "Which version are you?",
			},
		},
	}

	jsonPayload, err := json.Marshal(payload)
	if err != nil {
		fmt.Println("Error encoding JSON payload:", err)
		return
	}

	req, err := http.NewRequest("POST", url, bytes.NewBuffer(jsonPayload))
	if err != nil {
		fmt.Println("Error creating HTTP request:", err)
		return
	}
	req.Header.Set("Authorization", "Bearer "+apiKey)
	req.Header.Set("Content-Type", "application/json")

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		fmt.Println("Error making API request:", err)
		return
	}
	defer resp.Body.Close()

	// This example only reports the HTTP status; read resp.Body to get the reply.
	fmt.Println("Response HTTP Status:", resp.StatusCode)
}

SSE typing effect in JavaScript
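The typing effect relies on streaming mode (`stream: true`), where the response arrives as server-sent events: `data: {...}` blocks separated by blank lines and ended by `data: [DONE]`, with each JSON chunk carrying a text fragment in `choices[0].delta.content`. These are the same markers and fields the JavaScript code in this section reads. As a minimal sketch of the parsing step alone, in Python and with the hypothetical function name `parse_sse_text`:

```python
import json


def parse_sse_text(raw: str) -> str:
    """Concatenate the text fragments found in a buffered SSE response body."""
    pieces = []
    for event in raw.strip().split("\n\n"):
        event = event.strip()
        if not event.startswith("data:"):
            continue  # ignore anything that is not a data event
        payload = event[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        try:
            chunk = json.loads(payload)
        except json.JSONDecodeError:
            continue  # an incomplete trailing event while the stream is still arriving
        choices = chunk.get("choices") or []
        delta = choices[0].get("delta", {}) if choices else {}
        pieces.append(delta.get("content") or "")
    return "".join(pieces)
```

Calling the function repeatedly on the growing buffer yields progressively longer text, which is what produces the typing effect.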

Tips

The code can run in a Node.js backend or in the browser. If you use it in the frontend, take care to protect your key.

How can you protect the key? Put nginx in front of the API and have nginx add the "Authorization": "Bearer hk-your-key" header, so the key never appears in frontend code.
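The idea behind the nginx tip is that the server, not the browser, attaches the key. The Python sketch below illustrates only that header-injection step; it is not an nginx configuration, and the names `build_upstream_headers` and `OPENAI_HK_KEY` are assumptions made for the example.

```python
import os

# Assumption: the key is supplied to the server process via an environment variable.
API_KEY = os.environ.get("OPENAI_HK_KEY", "hk-your-key")


def build_upstream_headers(client_headers: dict, api_key: str = API_KEY) -> dict:
    """Return the headers to forward upstream, with the server-side key injected."""
    forwarded = {
        name: value
        for name, value in client_headers.items()
        # Drop any Authorization sent by the browser, plus per-connection headers.
        if name.lower() not in {"authorization", "host", "content-length"}
    }
    forwarded["Authorization"] = f"Bearer {api_key}"
    return forwarded
```

A proxy built this way lets frontend code call your own server without ever holding the key.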

// Requires `axios` to be imported or loaded on the page.
const chatGPT = (msg, opt = {}) => {
  // Parse one SSE event ("data: {...}" or "data: [DONE]").
  const dataPar = (data) => {
    const idx = data.indexOf("data:");
    if (idx === -1) return { text: "" };
    const str = data.slice(idx + 5).trim();
    if (str === "[DONE]") return { finish: true, text: "" };
    try {
      const rz = JSON.parse(str);
      rz.text = rz.choices?.[0]?.delta?.content ?? "";
      return rz;
    } catch (err) {
      // The last event can be incomplete while the stream is still downloading.
      return { text: "" };
    }
  };

  // Parse everything received so far and report the accumulated text.
  const dd = (data) => {
    const arr = String(data).trim().split("\n\n");
    const atext = arr.map((v) => dataPar(v).text);
    const rz = { text: atext.join(""), arr: atext };
    if (opt.onMessage) opt.onMessage(rz);
    return rz;
  };

  return new Promise((resolve, reject) => {
    axios({
      method: "post",
      url: "https://api.openai-hk.com/v1/chat/completions",
      data: {
        max_tokens: 1200,
        model: "gpt-3.5-turbo",
        temperature: 0.8,
        top_p: 1,
        presence_penalty: 1,
        messages: [
          {
            role: "system",
            content: opt.system ?? "You are ChatGPT",
          },
          {
            role: "user",
            content: msg,
          },
        ],
        stream: true, // stream the output
      },
      headers: {
        "Content-Type": "application/json",
        Authorization: "Bearer hk-your-key",
      },
      // axios 1.x wraps the native progress event in `e.event`; older versions pass it directly.
      // `responseText` comes from the browser's XMLHttpRequest.
      onDownloadProgress: (e) => dd((e.event ?? e).target.responseText),
    })
      .then((d) => resolve(dd(d.data)))
      .catch((e) => reject(e));
  });
};

chatGPT("Which version are you?", {
  //system:'',
  onMessage: (d) => console.log("The process result:", d.text),
})
  .then((d) => console.log("✅The final result: ", d))
  .catch((e) => console.log("❎Error: ", e));

Embeddings interface
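Embedding vectors are typically compared with cosine similarity. The sketch below assumes the response follows the official OpenAI format, with the vector at `data[0].embedding`; this page does not show the response, so treat that field path and the helper names `extract_embedding` and `cosine_similarity` as assumptions.

```python
import math


def extract_embedding(response_body: dict) -> list:
    """Return the first embedding vector (assumes the official OpenAI response layout)."""
    return response_body["data"][0]["embedding"]


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)
```

Values close to 1 indicate texts with similar meaning; values near 0 indicate unrelated texts.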

Node.js Example

// node-fetch v2 (CommonJS). On Node.js 18+, the built-in global fetch can be used instead.
const fetch = require("node-fetch");

fetch("https://api.openai-hk.com/v1/embeddings", {
  method: "POST",
  headers: {
    Authorization: "Bearer hk-your-key",
    "Content-Type": "application/json",
  },
  body: JSON.stringify({
    input: "Please use ChatGPT",
    model: "text-embedding-ada-002",
  }),
})
  .then((res) => res.json())
  .then((json) => console.log(json))
  .catch((err) => console.error(err));

Moderations interface
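A moderation call is usually followed by a check of which categories were flagged. The sketch below assumes the official OpenAI response layout (`results[0].flagged` and a `results[0].categories` map of booleans); the page does not show the response body, so both the layout and the helper name `flagged_categories` are assumptions.

```python
def flagged_categories(response_body: dict) -> list:
    """List the category names marked true in the first moderation result."""
    result = response_body["results"][0]
    if not result.get("flagged"):
        return []
    return sorted(name for name, hit in result.get("categories", {}).items() if hit)
```

An empty list means the input passed moderation.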

Node.js Example

// node-fetch v2 (CommonJS). On Node.js 18+, the built-in global fetch can be used instead.
const fetch = require("node-fetch");

fetch("https://api.openai-hk.com/v1/moderations", {
  method: "POST",
  headers: {
    Authorization: "Bearer hk-your-key",
    "Content-Type": "application/json",
  },
  body: JSON.stringify({ input: "something wrong with you" }),
})
  .then((res) => res.json())
  .then((json) => console.log(json))
  .catch((err) => console.error(err));

Many Applications

- Many existing applications work with this API; choose whichever you like.

chatgpt-web

Start with Docker, using the default model gpt-3.5:

docker run --name chatgpt-web -d -p 6011:3002 \
  --env OPENAI_API_KEY=hk-your-key \
  --env TIMEOUT_MS=600000 --env OPENAI_MAX_TOKEN=1000 \
  --env OPENAI_API_BASE_URL=https://api.openai-hk.com chenzhaoyu94/chatgpt-web

chatgpt-web gpt-4

The default model is gpt-3.5. To make gpt-4 the default, set the environment variable OPENAI_API_MODEL:

docker run --name chatgpt-web -d -p 6040:3002 \
  --env OPENAI_API_KEY=hk-your-key \
  --env TIMEOUT_MS=600000 --env OPENAI_MAX_TOKEN=1000 \
  --env OPENAI_API_MODEL=gpt-4-0613 \
  --env OPENAI_API_BASE_URL=https://api.openai-hk.com chenzhaoyu94/chatgpt-web

Then visit http://127.0.0.1:6040
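The two chatgpt-web commands differ only in the host port and the environment variables. As a small illustration, the Python sketch below assembles such a command from a settings mapping; the function name `docker_run_command` is hypothetical, while the image name, container port, and variable names come from the commands above.

```python
import shlex


def docker_run_command(
    name: str,
    host_port: int,
    env: dict,
    image: str = "chenzhaoyu94/chatgpt-web",
    container_port: int = 3002,
) -> str:
    """Build a `docker run` command line with one --env flag per setting."""
    args = ["docker", "run", "--name", name, "-d", "-p", f"{host_port}:{container_port}"]
    for key, value in env.items():
        args += ["--env", f"{key}={value}"]
    args.append(image)
    return shlex.join(args)  # quotes arguments only where the shell requires it
```

For example, passing `{"OPENAI_API_MODEL": "gpt-4-0613"}` alongside the key and base URL reproduces the gpt-4 variant.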

What are the available models?

| Model | Description |
|---|---|
| gpt-3.5-turbo-0613 | 3.5 version with 4k context support |
| gpt-3.5-turbo-16k-0613 | 3.5 version with 16k context support for longer contexts, more expensive than regular 3.5 |
| gpt-3.5-turbo-1106 | 3.5 version with 16k context, priced the same as 3.5 with 4k |
| gpt-4 | 8k version of 4.0 with context support up to 8k |
| gpt-4-0613 | The 0613 version of 4.0 |
| gpt-4-1106-preview | 128k version of 4.0, priced at half the cost of regular gpt-4 |
| gpt-4-vision-preview | Same token pricing as gpt-4-1106-preview, supports 4k, with additional fees for images |
| dall-e-3 | OpenAI's image generation model |
| Midjourney | Non-OpenAI image generation product |
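The context sizes in the table can guide model choice. The sketch below is a hypothetical helper (`models_fitting`) that lists the chat models whose nominal context is large enough for a request. It includes only rows where the table states a context size, so gpt-4-0613 and the non-chat entries are left out.

```python
# Nominal context sizes in thousands of tokens, copied from the table above.
CONTEXT_K = {
    "gpt-3.5-turbo-0613": 4,
    "gpt-3.5-turbo-16k-0613": 16,
    "gpt-3.5-turbo-1106": 16,
    "gpt-4": 8,
    "gpt-4-1106-preview": 128,
}


def models_fitting(required_k: int, family: str = "gpt-") -> list:
    """Return models whose context is at least `required_k` thousand tokens."""
    fits = [m for m, k in CONTEXT_K.items() if m.startswith(family) and k >= required_k]
    return sorted(fits, key=lambda m: CONTEXT_K[m])  # smallest sufficient context first
```

Price is not modeled here; the table's pricing notes still apply when choosing among the results.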

Result

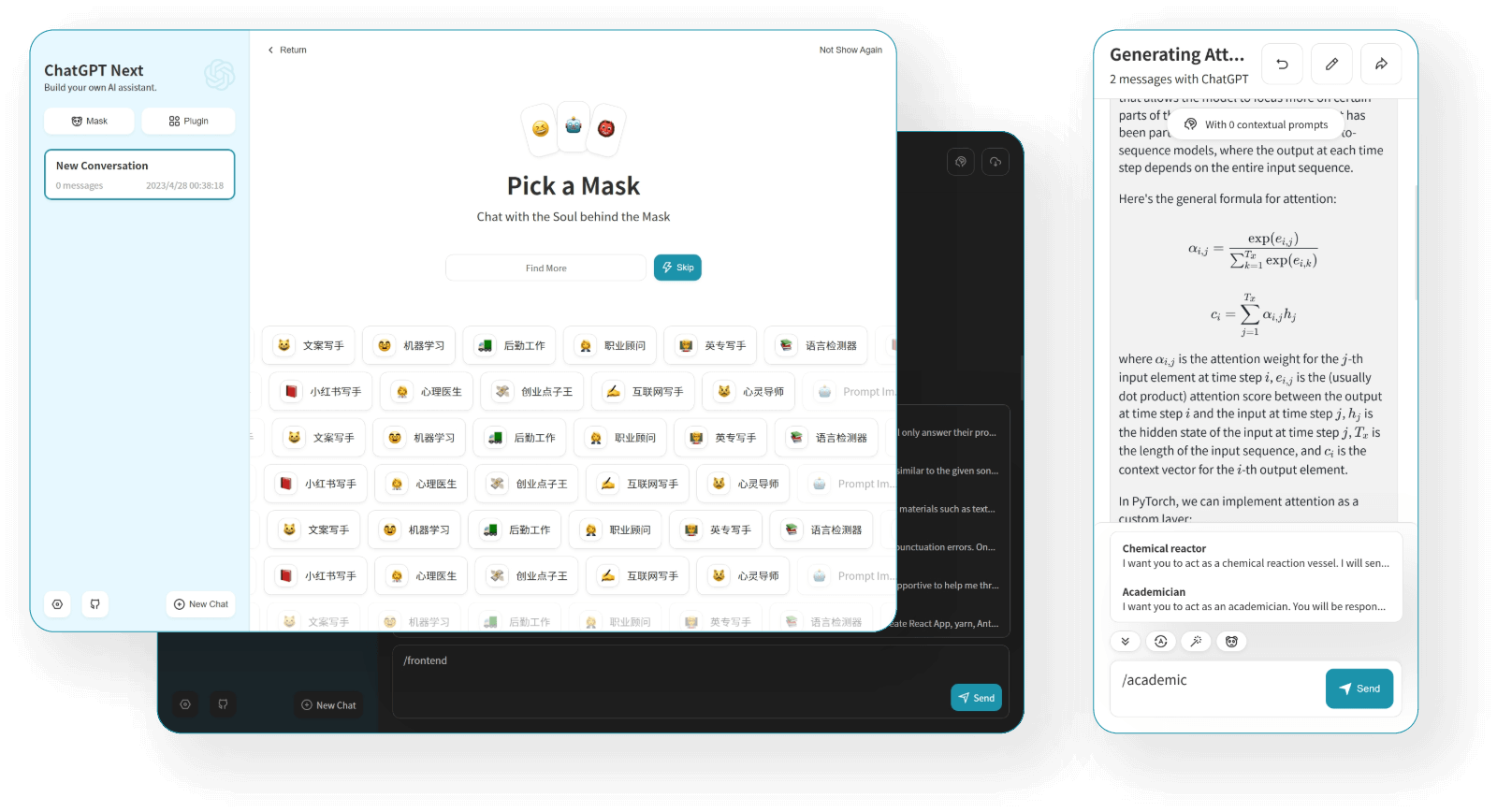

chatgpt-next-web

docker run --name chatgpt-next-web -d -p 6013:3000 \
  -e OPENAI_API_KEY="hk-your-key" \
  -e BASE_URL=https://api.openai-hk.com yidadaa/chatgpt-next-web

Other

If you need other applications, please contact our customer service.

The Online Chat App

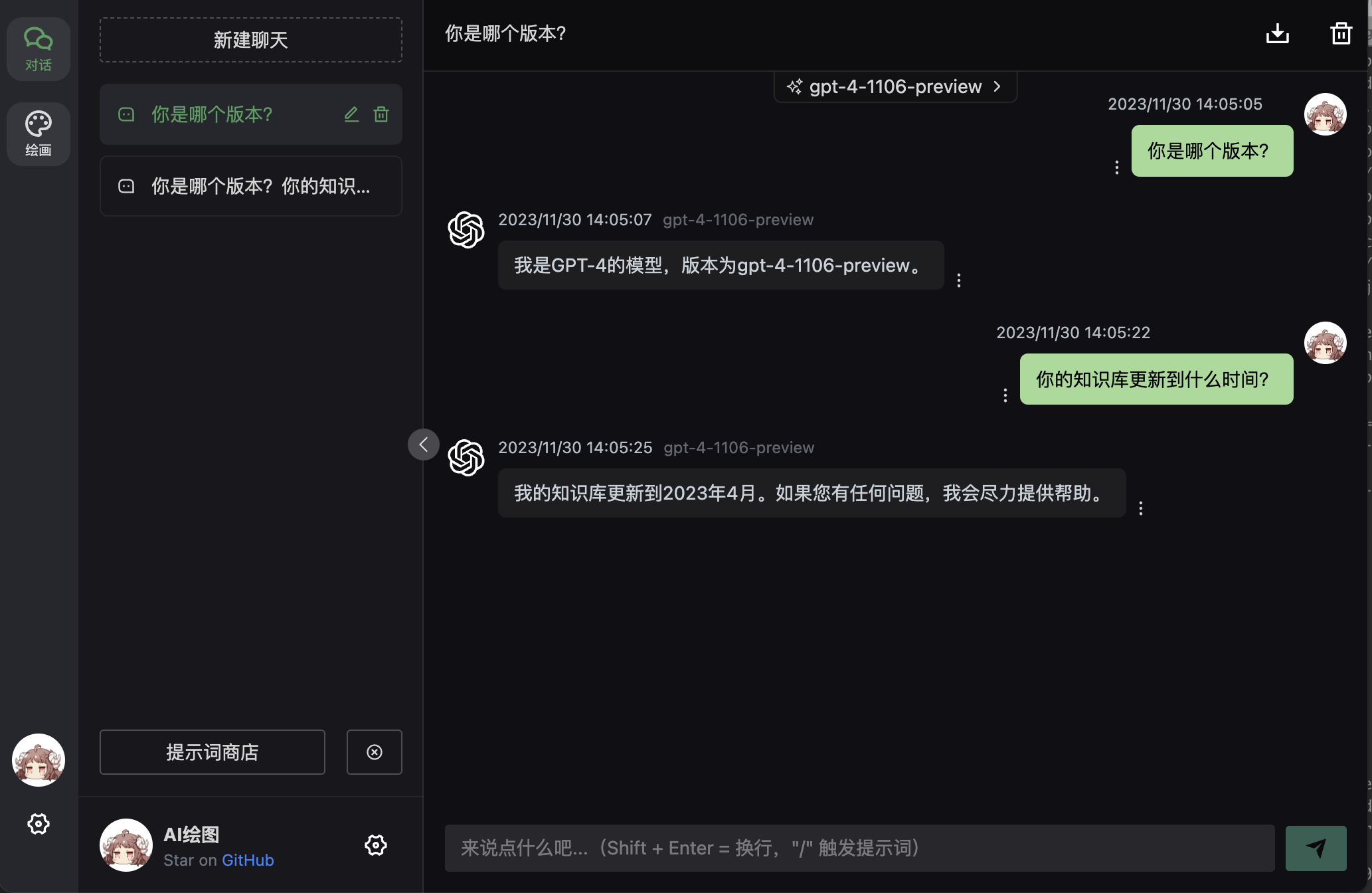

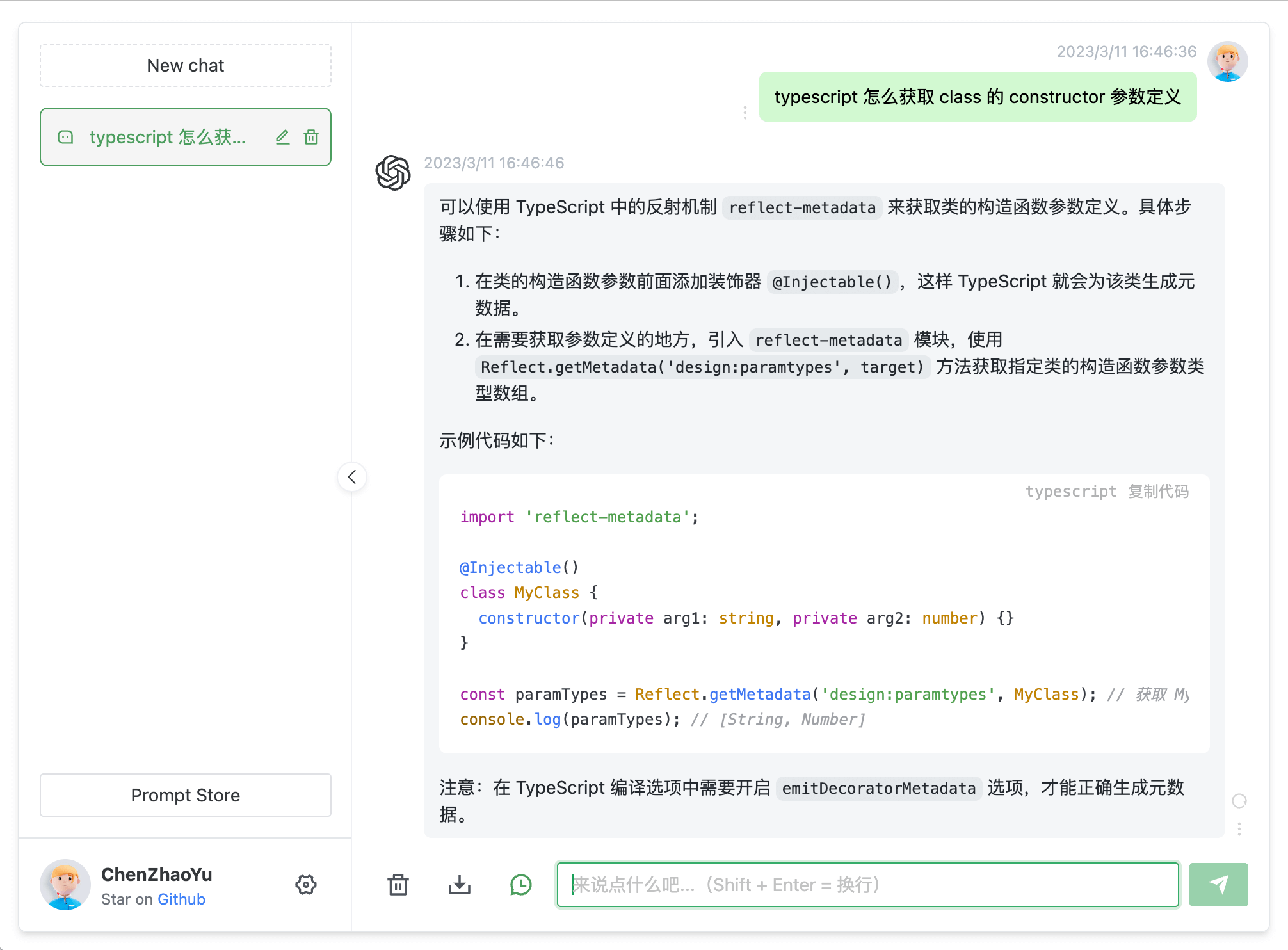

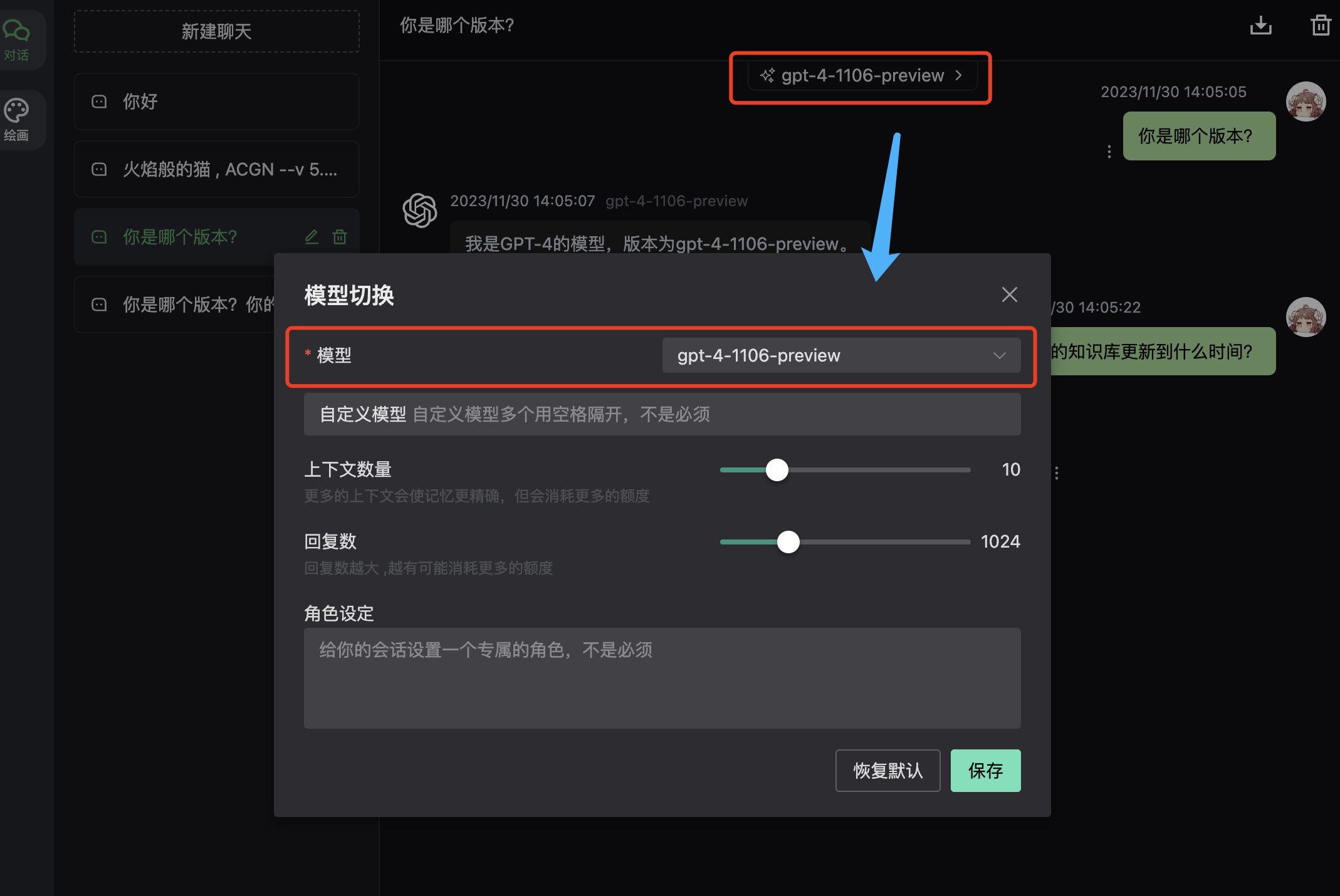

1. ChatGPT Web with Multiple Model Switching

Notes

- Visit https://chat.ddaiai.com/ (if it is blocked, replace the subdomain 'chat' with 'hi').

- If that is also blocked, you can change the subdomain yourself: in 'https://hello.ddaiai.com', replace 'hello' with anything else, for example 'https://202312.ddaiai.com', and it will still work.

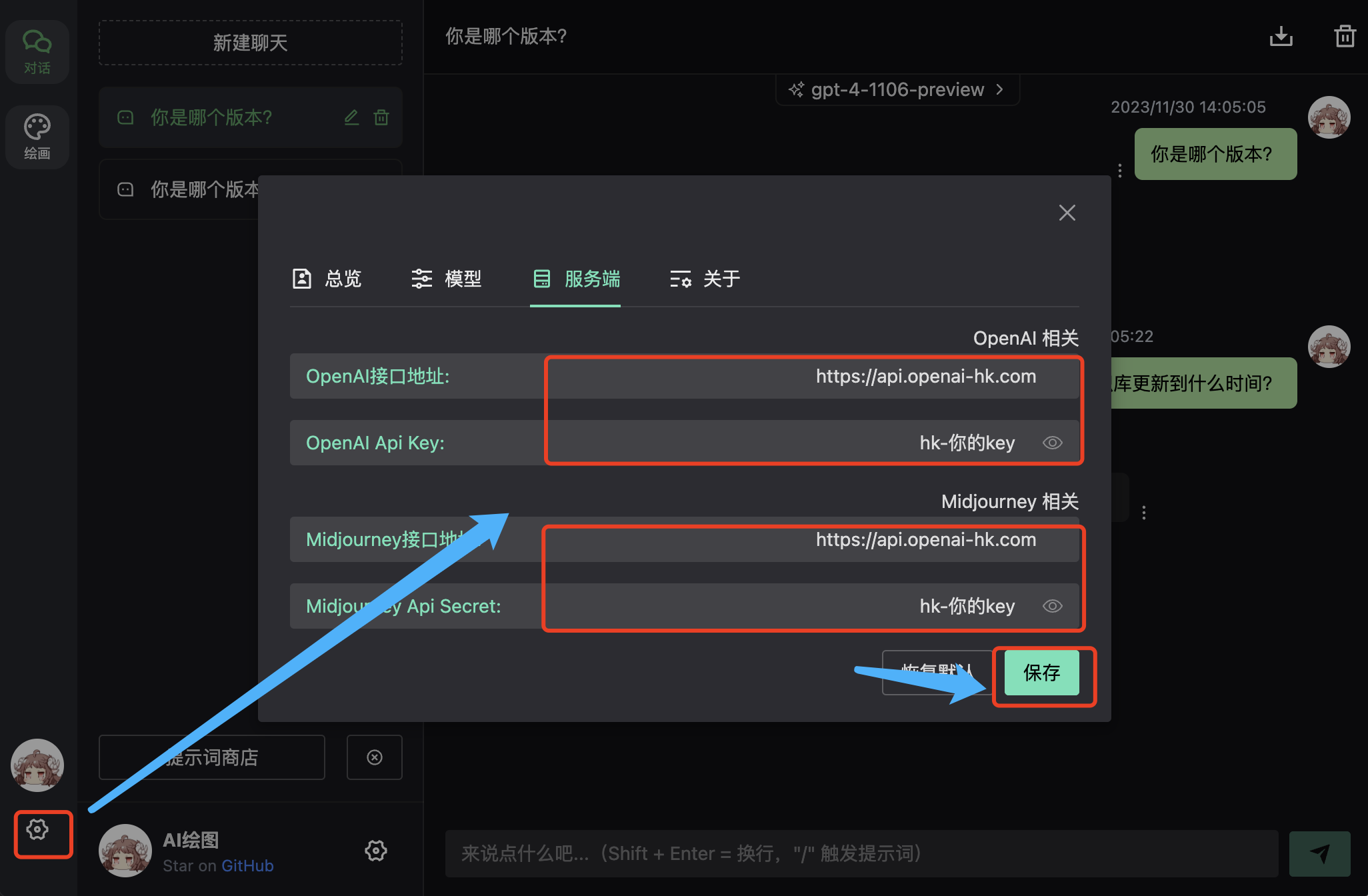

Settings

Then fill in the corresponding fields as shown in the image below:

OpenAI API address: https://api.openai-hk.com

OpenAI API KEY: hk-yourApiKey

If you want to generate images, also fill in the Midjourney settings:

Midjourney API address: https://api.openai-hk.com

Midjourney API Secret: hk-yourApiKey

Result

Input box for questions and dialogue

Model switching: custom models are supported; separate multiple models with commas.

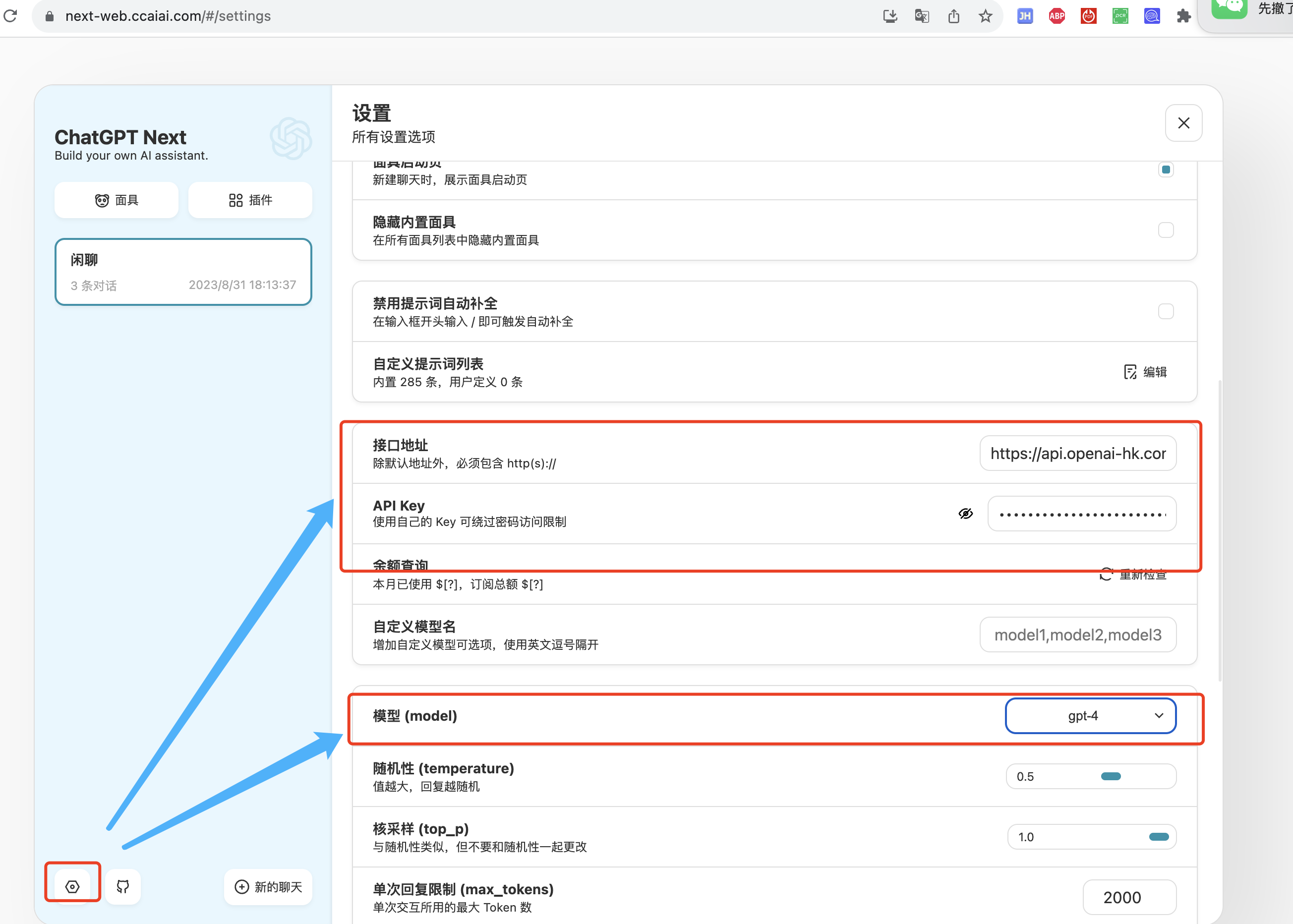

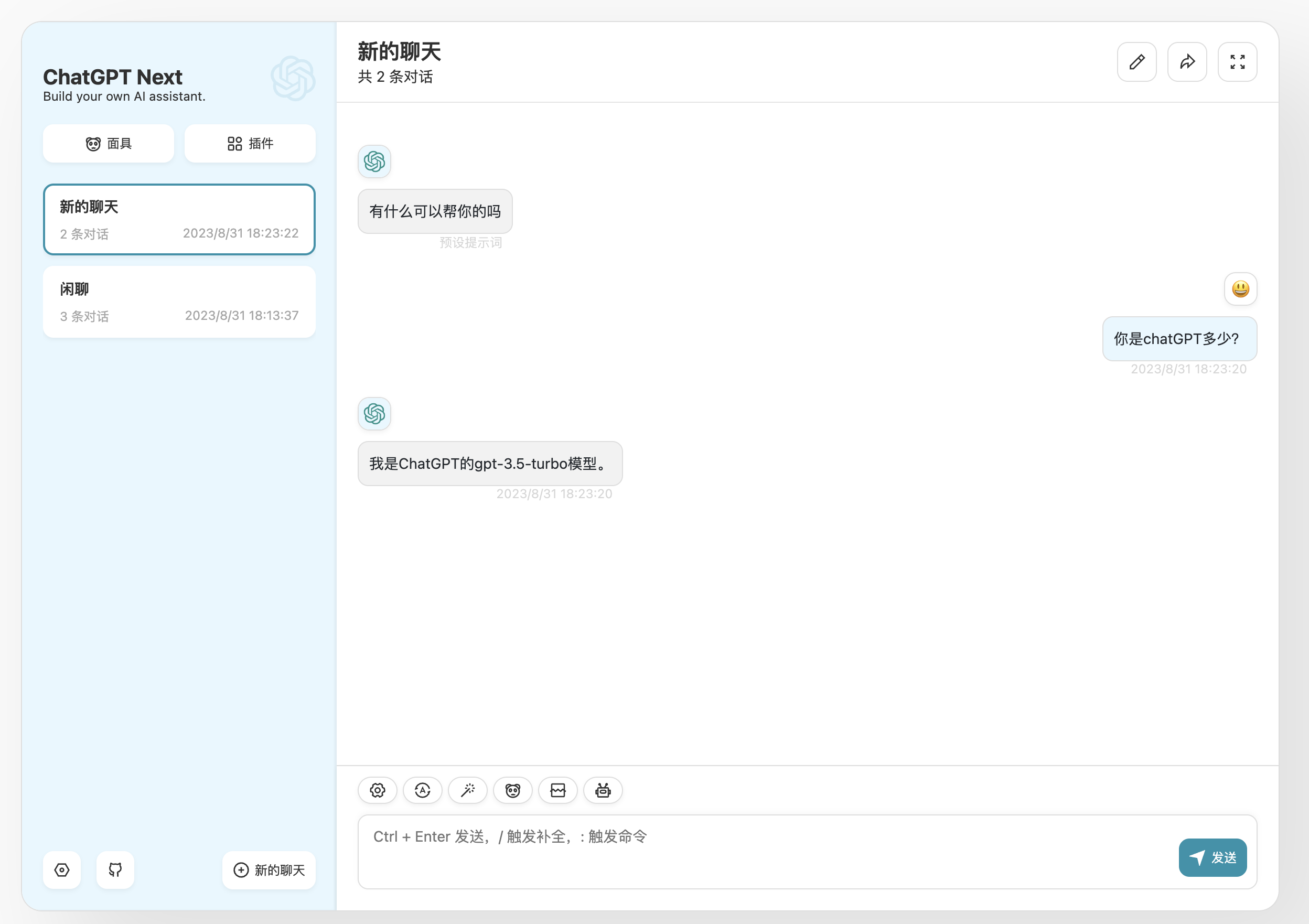

2. Next-Web with Multiple Model Switching

Notes

- Visit https://web.ccaiai.com/ (requires VPN).

- If it is blocked, you can change the subdomain yourself: in 'https://suibian.ccaiai.com', replace 'suibian' with anything else, for example 'https://abc.ccaiai.com', and it will still work.

Then fill in the corresponding fields as shown in the image below:

API address: https://api.openai-hk.com

API KEY: hk-yourApiKey

Model: Select GPT-4

Input box for questions and dialogue

OpenAi-HK
